There’s no penalty for jumping the gun

On your marks. Get set. Go. When the starting gun goes off, there is always going to be a rush of adrenalin, a surge of excitement, and a striving to get up to speed and do your best.

But when the starting gun goes off in relation to a Gartner Magic Quadrant (MQ) assessment of your company, in many ways it is already too late.

Magic Quadrants generally appear once a year. For the companies on the receiving end, they can be make-or-break factors, with a huge influence on business prospects for the year ahead.

For the analysts involved, they are important pieces of work, but they have to be fitted in alongside research reports, client inquiries and meetings, events and presentations, custom engagements, webinars, blogs, and a host of other commitments. Even setting the rest of that annual workload aside, producing a Magic Quadrant means identifying and investigating each of the companies that will appear in the final diagram. On top of this, the analyst has to give due consideration to all the peripheral candidates that must be evaluated before anyone can decide whether they should be included.

The wonder is not that so many MQ assessments leave so many vendors feeling disappointed, but that so many MQs win general acceptance as being pretty fair, diligent, and useful assessments of the state of play in particular markets.

Perspectives can’t be changed overnight

If you are a small or medium-sized vendor, the key to making yourself visible and understood, and to getting the most positive assessment your performance justifies, is to make sure you are off and running well before the starting gun is fired.

That may sound paradoxical, but Magic Quadrants don’t come out of the blue. As mentioned earlier, they appear roughly once a year. So the best time to start preparing for a successful assessment is 12 months ahead, immediately after the previous MQ has been published.

Even then, there’s a lot to do in the time available.

If you believe the analyst specializing in your area ought to be adjusting his or her perspective and reframing the way your sector is viewed, you need to be making contact, briefing the analyst, and putting forward evidence to support that change of standpoint very early in the year. As the process gathers pace, your chances of influencing the analyst’s view of market issues and priorities rapidly decline.

Right from the start, you need to be reviewing the factual evidence that will demonstrate your capabilities, identifying the gaps in it, and starting to gather or commission the elements you will need to support your case.

That means lining up the right references and collecting relevant case studies and customer success stories. It may mean commissioning customer surveys or market survey work that will lend strength to your arguments and credibility to your strategy. It will certainly involve making sure you have clean, presentable internal data relating to sales, marketing, and financial solidity and performance.

Your bigger competitors know the score

If you do not start preparing all this evidence early, you will simply run out of time. But where big corporations put money and resources into analyst relations teams to prepare their evidence and put the story across, smaller firms cannot take that approach. If you can’t slug it out toe-to-toe, you have to be smart about what evidence you are going to collect and how you are going to deploy it.

The material that’s required will need to be thought about, prepared, and substantiated as far in advance as possible. You’ll need a story, a plan, and an evidence pack, and you’ll need all three sooner rather than later. The sooner you start, the better placed you will be.

Once the actual assessment process begins, you will find yourself staring at a pro forma list of perhaps 50 questions, many of which will leave little scope for you to tell the story you want to tell. Within the formal process you may have the chance to do a product demonstration and possibly a briefing with the analyst. But the formal assessment period is short, and there certainly won’t be time to go gathering customer views or commissioning market surveys to back up your arguments. You need this ammunition in your locker before the clock starts ticking.

And there’s another reason why this is so essential. Magic Quadrants rate vendors in a relative way, not in absolute terms. They are essentially competitive. If you are not seen to be going forward, relative to your competitors, you may well find your dot moving backwards. It’s dot eat dot. That is why the bigger companies you’re up against employ the full-time analyst relations staff that you can’t afford.

But, believe me, the morning after the MQ is published, these people will be coming straight into the office, rolling their sleeves up, and starting work on preparation for the next one. If you’re going to compete successfully, you can’t afford to give them a head start.

Are we on target? The last thing we want is everyone agreeing with what goes into this blog. After all, if you don’t disagree with some of the points we’ve raised, we’ll be forced to be more and more provocative, and who knows where that will end? So let us have your thoughts. Have you seen the payoffs from preparing your evidence early? Or do you feel MQ assessments are a lottery, whatever you do to make your case? Shoot us down and have your say.

Posted by theskillsconnection

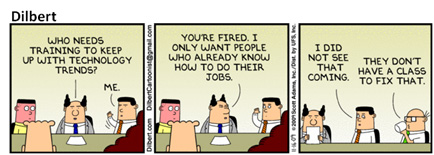

What is the role for managers in an associate’s development? If the manager sends an associate to a training workshop or identifies an appropriate e-learning program, is that enough?

In other words, “what’s the story?” This is a key issue moving forward for any company as the breadth and speed of communication get ever wider and faster. A comment made in private by phone may appear on a blog and be under intense discussion through Twitter in a matter of seconds. If you don’t believe me, just check the web stats for your business and look at the sources of the people visiting your site. More and more, these are not conventional referring sites but blogs and Twitter posts!

According to Brooks, “this is a look at how tribal dominance struggles get processed inside.” My own takeaway, though, as a lifelong Yankees fan, was “I didn’t know Red Sox fans had brains.”